The Green Paradox... How AI’s Power Hunger Threatens the Very Climate Goals It Was Meant to Save

Jim Leone

10/6/2025 · 7 min read

The Promise vs. the Paradox...

Artificial Intelligence was supposed to help us build a cleaner, smarter world. From optimizing electric grids and predicting climate impacts to streamlining logistics and eliminating waste, AI has long been championed as a tool for sustainability.

But as the world races to build and train ever-larger models, another story is unfolding, one that few policymakers anticipated. The same green policies meant to save the planet are now indirectly fueling a new wave of massive power consumption, environmental stress, and infrastructure sprawl.

The contradiction is striking: governments across the U.S., Europe, and Asia are pouring billions into clean energy initiatives while tech companies, lured by cheap renewable credits and tax incentives, erect colossal AI data centers that demand more power than entire cities.

This is the green paradox, a collision between sustainability ambitions and the digital revolution’s unrelenting appetite for energy.

The Scale of the Power Surge...

Over the past three years, AI infrastructure has grown at a pace unmatched in tech history. Data centers once designed for cloud storage and routine computing are now being retrofitted or replaced by high-density AI superclusters, each requiring enormous electrical capacity and cooling systems to support racks of GPUs running 24/7.

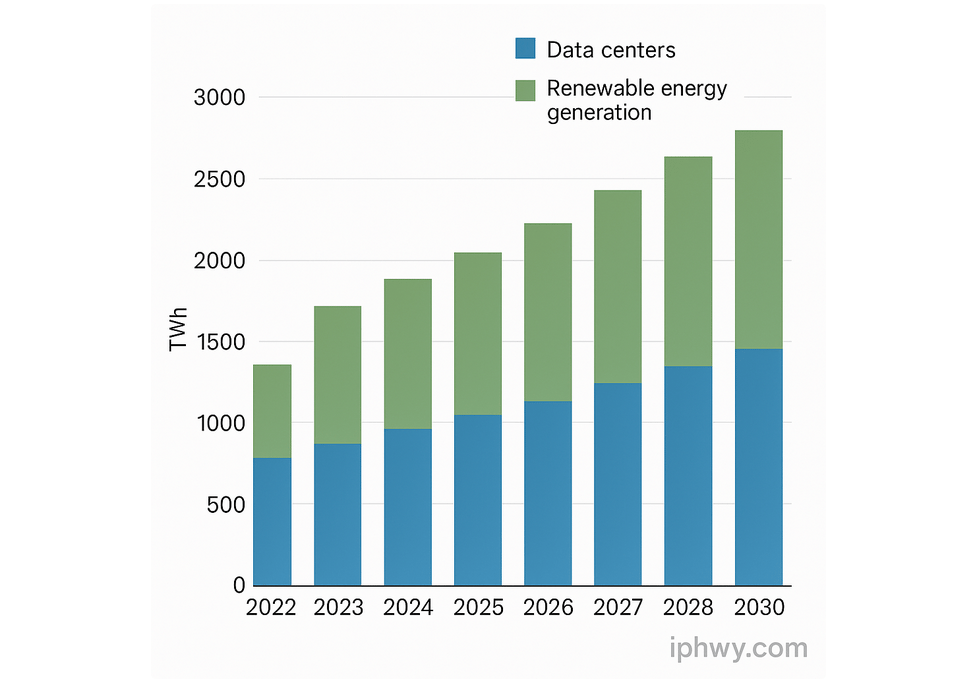

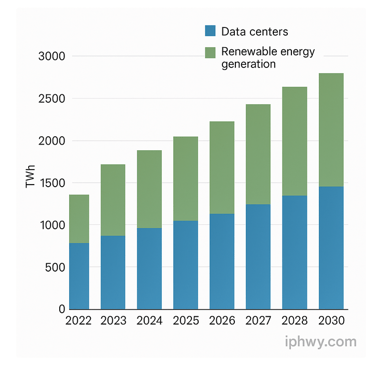

According to the International Energy Agency (IEA), global data center electricity demand is projected to double by 2030, reaching more than 1,000 terawatt-hours (TWh) annually. In the U.S. alone, data centers already consume over 4% of total electricity, half of which still comes from fossil fuels.

That translates to roughly 105 million metric tons of CO₂ emissions per year, equivalent to the output of 30 coal-fired plants.

Even a single training run for a large AI model such as GPT-4 or Gemini Ultra has been estimated to use as much electricity as 100-120 U.S. homes consume in a year, and that’s before the ongoing “inference” usage (where those models answer billions of queries daily).

AI is no longer just a software issue; it’s a power industry unto itself.

The Infrastructure Boom Nobody Planned For...

When we talk about AI expansion, we often focus on model size or innovation speed. What’s less discussed is the physical footprint of this digital boom.

Each new hyperscale data center can consume between 50 and 200 megawatts (MW) of continuous power, roughly the demand of a small town. Microsoft recently filed for approval of a single campus in Iowa expected to require 750 MW, more than the entire city of San Francisco.
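To put those megawatt figures in annual terms, here is a minimal back-of-the-envelope sketch. It assumes each facility draws its full rated power around the clock (a 100% load factor, which is an illustrative simplification), and the function name is hypothetical.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 hours, ignoring leap years


def annual_energy_twh(continuous_mw: float, hours: int = HOURS_PER_YEAR) -> float:
    """Convert a constant power draw in MW to annual energy in TWh.

    Assumption: the facility runs at the stated power for every hour of the year.
    """
    mwh = continuous_mw * hours
    return mwh / 1_000_000  # 1 TWh = 1,000,000 MWh


# The 50 MW, 200 MW, and 750 MW figures come from the paragraph above.
for label, mw in [("50 MW site", 50), ("200 MW site", 200), ("750 MW campus", 750)]:
    print(f"{label}: {annual_energy_twh(mw):.2f} TWh/year")
# 50 MW site: 0.44 TWh/year
# 200 MW site: 1.75 TWh/year
# 750 MW campus: 6.57 TWh/year
```

Real facilities rarely run at full rated load all year, so these numbers are upper bounds for the stated capacities.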

To supply that power, utilities are racing to expand generation capacity, often falling back on fossil plants to meet near-term demand. The irony is glaring: while clean energy investments rise on paper, actual grid reliance on carbon-intensive sources often increases just to keep AI infrastructure running.

Cooling adds another layer of complexity. Liquid cooling and evaporative systems can use millions of gallons of water per day, often in regions like Arizona, Nevada, or Central California, areas already struggling with chronic droughts.

The “cloud,” it turns out, is deeply physical, and increasingly thirsty.

The Hidden Environmental Costs...

AI’s environmental footprint extends far beyond electricity. Each stage of the infrastructure lifecycle, from chip fabrication to construction to decommissioning, carries its own burden.

A. Manufacturing and Mining

AI chips, servers, and batteries require rare minerals such as lithium, cobalt, and palladium. Mining these materials often leads to deforestation, toxic runoff, and hazardous labor conditions.

A single data center can contain tens of thousands of GPUs and miles of copper cabling. The embodied carbon of this hardware (the emissions released during its manufacture) can equal decades of operational emissions.

B. Water Use and Cooling

Water-cooled systems are efficient at preventing thermal runaway, but at a cost. Google reported using over 4.3 billion gallons of water across its U.S. data centers in 2023 alone.

When those facilities are located in water-scarce regions, that usage competes directly with local communities and agriculture.

C. E-Waste and Toxic Materials

Servers are typically replaced every 3-5 years. That means a constant cycle of electronic waste: circuit boards containing lead, arsenic, and other toxic compounds.

Without strict recycling mandates, much of this waste ends up exported to developing countries or buried in landfills where contaminants seep into groundwater.

D. Air Quality and Local Pollution

Even “green” data centers require backup diesel generators for redundancy. In high-density regions, those emissions contribute to localized air pollution, creating a silent environmental inequality: communities near data center clusters bear the worst air quality impacts of our digital future.

When Green Policy Turns Gray...

Here’s where the contradiction sharpens. The U.S. government, the European Union, and states like California have enacted landmark clean-energy laws and targets: the Inflation Reduction Act (IRA), the EU Green Deal, and California’s carbon neutrality goals.

These initiatives were designed to decarbonize industry and transportation. But none accounted for the explosive new demand coming from AI compute.

In practice, “green” policy has enabled this growth in three key ways:

A. Renewable Credits as a Loophole

Corporations can buy renewable energy credits (RECs) to offset their electricity use, even if their actual operations run on mixed or fossil grids. That means a data center powered primarily by gas turbines can still be marketed as “100% renewable,” as long as the company buys enough credits elsewhere.

It’s financial greening, not physical decarbonization.
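The gap between the two kinds of greening shows up in how emissions are counted. Below is a minimal sketch that contrasts a location-based figure (what the local grid actually emits) with a market-based figure (after netting out purchased credits, where one REC represents one MWh of renewable generation). The function names, the load, and the grid intensity are hypothetical values chosen for illustration.

```python
def location_based_tco2(load_mwh: float, grid_intensity_t_per_mwh: float) -> float:
    """Emissions implied by the grid that physically supplies the facility."""
    return load_mwh * grid_intensity_t_per_mwh


def market_based_tco2(
    load_mwh: float, recs_mwh: float, residual_intensity_t_per_mwh: float
) -> float:
    """Emissions reported after subtracting purchased credits from the load."""
    uncovered_mwh = max(load_mwh - recs_mwh, 0.0)
    return uncovered_mwh * residual_intensity_t_per_mwh


load = 1_000_000.0  # MWh per year (hypothetical facility)
intensity = 0.5     # tCO2 per MWh (hypothetical fossil-heavy grid)

print(location_based_tco2(load, intensity))                # 500000.0
print(market_based_tco2(load, recs_mwh=load,
                        residual_intensity_t_per_mwh=intensity))  # 0.0
```

Same facility, same grid, same physical emissions: the only thing that changed between the two lines is the accounting method.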

B. Tax Incentives Fueling Private Expansion

Federal and state subsidies for renewable generation make it easier for tech giants to build or contract clean power for themselves. But that power is then diverted from the public grid.

So while headlines tout “new solar projects,” the result is often private greening and public stagnation, slowing community-wide decarbonization.

C. Regulatory Blind Spots

No environmental policy today mandates that AI workloads run on low-carbon energy. There’s no cap on emissions intensity per teraflop, no water use limit per compute hour, and no reporting requirement for embodied carbon in chips.

We regulate tailpipe emissions from cars, but not the digital exhaust of a trillion-parameter model.

The Human Side of the Equation...

While AI infrastructure is abstract to most people, its local impacts are very real.

Rising Energy Costs

As utilities expand capacity to support data centers, costs are passed to consumers. In Virginia, home to the world’s largest data center hub, electricity rates have already risen in some counties due to new transmission projects driven by AI growth.

Water Scarcity

Residents near data center clusters often face restrictions while tech firms receive exemptions. In parts of Arizona, municipal leaders have been forced to choose between residential water allocation and industrial data cooling demands.

Air and Noise Pollution

Backup generators and constant mechanical cooling systems emit not only CO₂ but also NOx and particulate matter. They run loud, often 24/7, altering local soundscapes and degrading nearby air quality.

Economic Imbalance

The economic benefits of data centers are uneven. They generate limited long-term jobs (most are automated) but impose long-term resource costs. The environmental externalities are socialized; the profits are privatized.

The same communities promised “AI-driven progress” may soon face higher utility bills, depleted aquifers, and hazier skies, all in the name of digital intelligence.

The Political Dilemma...

Governments face a tough calculus. AI infrastructure is now seen as critical to national competitiveness and defense. No politician wants to be the one accused of “slowing innovation” or “ceding AI leadership to China.”

As a result, policymakers are walking a tightrope, publicly promoting green energy while quietly approving massive power expansions to keep hyperscale computing viable.

Some even rationalize it by claiming that AI will eventually help solve climate change, through predictive analytics, grid balancing, or carbon capture modeling. But that’s a theoretical future benefit, while the emissions cost is immediate.

This “future justification” narrative is dangerous. It mirrors the logic used by past industries that promised progress while externalizing environmental damage: oil, plastic, even nuclear expansion without waste planning.

The difference this time is that the byproduct isn’t smoke or sludge; it’s invisible electrons and data packets. But the consequences are just as tangible.

The Path to Reconciliation...

To avoid turning the green transition into a digital contradiction, we need both policy reform and technological responsibility. Here’s what can, and must, change:

Carbon-Aware Computing

Tech companies should be required to align compute scheduling with renewable availability. That means training large models during periods of solar or wind surplus, and dynamically throttling during fossil-heavy grid hours.

Google and Microsoft have both piloted early versions of this concept. Making it mandatory industry-wide would reduce net emissions without halting innovation.
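As a rough illustration of what carbon-aware scheduling could look like, the sketch below picks the start hour that minimizes total grid carbon intensity over a job's duration. It is not how Google or Microsoft implement it; the function name and the forecast values are hypothetical, and it assumes the job must run in one contiguous block.

```python
from typing import Sequence


def best_start_hour(forecast_g_per_kwh: Sequence[float], job_hours: int) -> int:
    """Return the start index of the contiguous window with the lowest total intensity."""
    if not 0 < job_hours <= len(forecast_g_per_kwh):
        raise ValueError("job_hours must be positive and fit inside the forecast")

    window = sum(forecast_g_per_kwh[:job_hours])
    best_total, best_start = window, 0
    for start in range(1, len(forecast_g_per_kwh) - job_hours + 1):
        # Slide the window one hour: drop the oldest hour, add the newest.
        window += forecast_g_per_kwh[start + job_hours - 1] - forecast_g_per_kwh[start - 1]
        if window < best_total:
            best_total, best_start = window, start
    return best_start


# Hypothetical 12-hour forecast in gCO2/kWh, with a midday solar dip.
forecast = [520, 510, 480, 390, 260, 180, 150, 170, 280, 410, 500, 530]
print(best_start_hour(forecast, job_hours=3))  # 5
```

A production scheduler would also have to weigh deadlines, hardware availability, and forecast error, but the core idea is this simple: shift flexible work toward the cleanest hours.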

Energy Addition, Not Subtraction

Governments should require that any new data center project add renewable capacity equivalent to or greater than its total load, rather than simply buying existing supply.

That ensures AI growth expands the clean energy pie rather than cannibalizing it.
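One wrinkle worth making explicit: renewable nameplate capacity is not the same as delivered energy, because wind and solar plants produce only a fraction of their rated output over a year. The sketch below estimates how much nameplate capacity would be needed to match a constant load on an annual-energy basis. The capacity factor used is a hypothetical round number, not a figure from any specific project.

```python
def required_nameplate_mw(load_mw: float, capacity_factor: float) -> float:
    """Nameplate capacity whose annual generation equals a constant load.

    Assumption: capacity_factor is the plant's average output divided by
    its rated output over a year (a value between 0 and 1).
    """
    if not 0 < capacity_factor <= 1:
        raise ValueError("capacity_factor must be in (0, 1]")
    return load_mw / capacity_factor


# A 100 MW continuous load paired with a plant at a hypothetical 25% capacity factor.
print(required_nameplate_mw(100, 0.25))  # 400.0
```

Even then, matching on annual totals does not guarantee clean power in every hour, which is why this requirement works best alongside carbon-aware scheduling.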

Water and Resource Accountability

Cooling water use should be transparently reported and regulated by local water boards, with mandatory efficiency ratios. Facilities in drought-prone regions should be required to use closed-loop or air-based cooling technologies, even at higher upfront cost.

Embodied Carbon Auditing

Hardware and construction emissions must be factored into sustainability metrics. That includes mining, manufacturing, shipping, and end-of-life disposal.

True sustainability isn’t just about what powers your servers; it’s about what built them.

Grid Modernization and Smart Siting

Data centers should be strategically located in regions with abundant renewable generation and low water stress, for example, near hydro or offshore wind corridors.

Governments can incentivize this via zoning, tax credits, or grid interconnection fast-tracks.

Mandatory Transparency

All major AI infrastructure operators should disclose:

Total electricity consumption (MWh)

Source mix (renewable vs fossil)

Cooling water use (gallons per MWh)

Backup fuel emissions

Hardware refresh rates and disposal practices

If a company can report quarterly profits to shareholders, it can report its environmental footprint to the public.
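To make that concrete, here is a minimal sketch of what a machine-readable disclosure record might look like, with the source mix and water intensity derived from the raw figures. The class name, field names, and sample values are all hypothetical; no existing reporting standard is implied.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FootprintDisclosure:
    """One reporting period for one facility (hypothetical schema)."""
    electricity_mwh: float         # total electricity consumption
    renewable_mwh: float           # portion physically sourced from renewables
    cooling_water_gallons: float   # total cooling water withdrawn
    backup_fuel_tco2: float        # emissions from backup generators
    hardware_refresh_years: float  # average server replacement interval
    disposal_practice: str         # free-text summary of end-of-life handling

    @property
    def renewable_share(self) -> float:
        """Fraction of consumption met by renewables (source mix)."""
        return self.renewable_mwh / self.electricity_mwh

    @property
    def gallons_per_mwh(self) -> float:
        """Cooling water intensity in gallons per MWh consumed."""
        return self.cooling_water_gallons / self.electricity_mwh


# Illustrative numbers only.
report = FootprintDisclosure(
    electricity_mwh=800_000,
    renewable_mwh=320_000,
    cooling_water_gallons=400_000_000,
    backup_fuel_tco2=1_200,
    hardware_refresh_years=4,
    disposal_practice="certified recycler",
)
print(f"{report.renewable_share:.0%} renewable, {report.gallons_per_mwh:.0f} gal/MWh")
# 40% renewable, 500 gal/MWh
```

Publishing the raw quantities alongside the derived ratios would let regulators and local communities check the arithmetic themselves.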

The Deeper Question... What Kind of Intelligence Are We Building?

At its core, this isn’t just a policy or technology debate; it’s a moral one.

We are teaching machines to simulate human thought, but are we teaching ourselves to think responsibly? The pursuit of intelligence without conscience is how civilizations lose their balance: it happens when innovation outpaces introspection.

There’s a growing belief that technology can “solve” any problem we create. Yet climate change is not a math problem; it’s a moral equation. If the cure for inefficiency becomes a new driver of environmental harm, then we haven’t solved anything. We’ve just shifted the cost to another part of the planet, or another generation.

The goal isn’t to stop AI; it’s to build it with discipline and ecological awareness, to make intelligence that respects the systems that sustain life rather than one that quietly undermines them in the name of progress.

The Intelligence We Need Now...

AI has the potential to transform humanity, but only if it doesn’t deplete the very resources we depend on to survive.

The world’s most powerful companies and governments cannot continue to promote sustainability while constructing megastructures that drain the grid, dry rivers, and inflate emissions under the guise of innovation.

We must hold technology to the same environmental accountability we demand from heavy industry. Today, the real smokestacks aren’t on factory roofs; they’re hidden behind fiber lines and cooling towers in data centers powered by yesterday’s fuels.

If we’re serious about a sustainable future, intelligence must come with humility. The machines we build to predict the future must not destroy it in the process.

The race to make machines smarter must not come at the cost of making the planet unlivable.

The IP HighWay

© 2025. IPHwy LLC. All rights reserved.